Publications

SCRAMSAC: Improving RANSAC's Efficiency with a Spatial Consistency Filter

Geometric verification with RANSAC has become a crucial step for many local feature based matching applications. Therefore, the details of its implementation are directly relevant for an application's run-time and the quality of the estimated results. In this paper, we propose a RANSAC extension that is several orders of magnitude faster than standard RANSAC and as fast as and more robust to degenerate configurations than PROSAC, the currently fastest RANSAC extension from the literature. In addition, our proposed method is simple to implement and does not require parameter tuning. Its main component is a spatial consistency check that results in a reduced correspondence set with a significantly increased inlier ratio, leading to faster convergence of the remaining estimation steps. In addition, we experimentally demonstrate that RANSAC can operate entirely on the reduced set not only for sampling, but also for its consensus step, leading to additional speed-ups. The resulting approach is widely applicable and can be readily combined with other extensions from the literature. We quantitatively evaluate our approach's robustness on a variety of challenging datasets and compare its performance to the state-of-the-art.
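The abstract does not spell out how the spatial consistency check works, so the following is only a minimal sketch of one plausible neighbourhood-based filter. It keeps a correspondence when enough of its neighbours in the first image also land near it in the second image. The function name and the `radius` and `min_fraction` parameters are assumptions made for illustration; they are not taken from the paper.

```python
from math import hypot


def spatial_consistency_filter(matches, radius=30.0, min_fraction=0.5):
    """Keep matches whose neighbours in image 1 are also neighbours in image 2.

    matches: list of ((x1, y1), (x2, y2)) point correspondences.
    radius, min_fraction: hypothetical parameters chosen for this sketch.
    """
    kept = []
    for i, (p, q) in enumerate(matches):
        near_in_first = 0
        near_in_both = 0
        for j, (p2, q2) in enumerate(matches):
            if i == j:
                continue
            # Count correspondences that start close to this one in image 1 ...
            if hypot(p[0] - p2[0], p[1] - p2[1]) <= radius:
                near_in_first += 1
                # ... and check whether they also end close to it in image 2.
                if hypot(q[0] - q2[0], q[1] - q2[1]) <= radius:
                    near_in_both += 1
        if near_in_first > 0 and near_in_both / near_in_first >= min_fraction:
            kept.append((p, q))
    return kept
```

The reduced set returned here would then be passed to a standard RANSAC loop, which is where the higher inlier ratio is expected to pay off.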

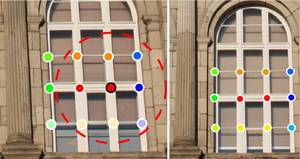

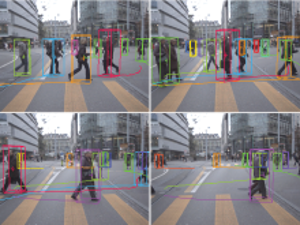

Robust Tracking-by-Detection Using a Detector Confidence Particle Filter

We propose a novel approach for multi-person tracking-by-detection in a particle filtering framework. In addition to final high-confidence detections, our algorithm uses the continuous confidence of pedestrian detectors and online trained, instance-specific classifiers as a graded observation model. Thus, generic object category knowledge is complemented by instance-specific information. A main contribution of this paper is the exploration of how these unreliable information sources can be used for multi-person tracking. The resulting algorithm robustly tracks a large number of dynamically moving persons in complex scenes with occlusions, does not rely on background modeling, and operates entirely in 2D (requiring no camera or ground plane calibration). Our Markovian approach relies only on information from the past and is suitable for online applications. We evaluate the performance on a variety of datasets and show that it improves upon state-of-the-art methods.
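As a rough illustration of a graded observation model in a particle filter, the sketch below runs one predict, weight, and resample step for a single target. It is a simplified stand-in and not the paper's formulation: the random-walk motion model, the `confidence_at` callback, and the `motion_noise` and `detection_sigma` parameters are all assumptions, and the instance-specific classifier term is omitted.

```python
import math
import random


def particle_filter_step(particles, detection, confidence_at, rng,
                         motion_noise=2.0, detection_sigma=5.0):
    """One predict-weight-resample step for a single 2D target.

    particles: list of (x, y) positions.
    detection: (x, y) of an associated high-confidence detection, or None.
    confidence_at: hypothetical function (x, y) -> detector confidence in [0, 1].
    rng: a random.Random instance.
    """
    # Predict: diffuse each particle with Gaussian noise (random-walk model).
    predicted = [(x + rng.gauss(0.0, motion_noise),
                  y + rng.gauss(0.0, motion_noise)) for x, y in particles]

    # Weight: the continuous confidence always contributes; the final
    # detection, when available, adds a distance-based likelihood term.
    weights = []
    for x, y in predicted:
        w = 1e-6 + confidence_at(x, y)
        if detection is not None:
            d2 = (x - detection[0]) ** 2 + (y - detection[1]) ** 2
            w *= math.exp(-d2 / (2.0 * detection_sigma ** 2))
        # Floor the weight so the total never collapses to zero.
        weights.append(max(w, 1e-300))

    # Resample: draw particles in proportion to their weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))
```

Because each step uses only the previous particle set and the current frame, the sketch shares the Markovian, online character described in the abstract.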

Feature-Centric Efficient Subwindow Search

Many object detection systems rely on linear classifiers embedded in a sliding-window scheme. Such exhaustive search involves massive computation. Efficient Subwindow Search (ESS) [11] avoids this by means of branch and bound. However, ESS makes an unfavourable memory tradeoff. Memory usage scales with both image size and overall object model size. This risks becoming prohibitive in a multiclass system. In this paper, we make the connection between sliding-window and Hough-based object detection explicit. Then, we show that the feature-centric view of the latter also nicely fits with the branch and bound paradigm, while it avoids the ESS memory tradeoff. Moreover, on-line integral image calculations are not needed. Both theoretical and quantitative comparisons with the ESS bound are provided, showing that none of this comes at the expense of performance.

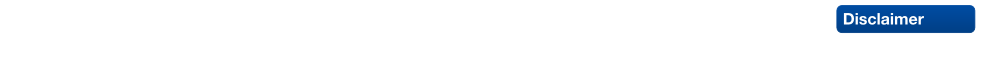

Using Multi-View Recognition and Meta-data Annotation to Guide a Robot's Attention

In the transition from industrial to service robotics, robots will have to deal with increasingly unpredictable and variable environments. We present a system that is able to recognize objects of a certain class in an image and to identify their parts for potential interactions. The method can recognize objects from arbitrary viewpoints and generalizes to instances that have never been observed during training, even if they are partially occluded and appear against cluttered backgrounds. Our approach builds on the Implicit Shape Model of Leibe et al. (2008). We extend it to couple recognition to the provision of meta-data useful for a task and to the case of multiple viewpoints by integrating it with the dense multi-view correspondence finder of Ferrari et al. (2006). Meta-data can be part labels but also depth estimates, information on material types, or any other pixelwise annotation. We present experimental results on wheelchairs, cars, and motorbikes.

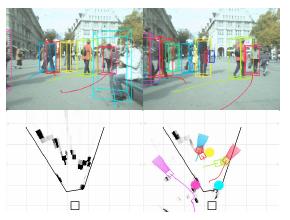

Robust Multi-Person Tracking from a Mobile Platform

In this paper, we address the problem of multi-person tracking in busy pedestrian zones using a stereo rig mounted on a mobile platform. The complexity of the problem calls for an integrated solution that extracts as much visual information as possible and combines it through cognitive feedback cycles. We propose such an approach, which jointly estimates camera position, stereo depth, object detection, and tracking. The interplay between those components is represented by a graphical model. Since the model has to incorporate object-object interactions and temporal links to past frames, direct inference is intractable. We therefore propose a two-stage procedure: for each frame we first solve a simplified version of the model (disregarding interactions and temporal continuity) to estimate the scene geometry and an overcomplete set of object detections. Conditioned on these results, we then address object interactions, tracking, and prediction in a second step. The approach is experimentally evaluated on several long and difficult video sequences from busy inner-city locations. Our results show that the proposed integration makes it possible to deliver robust tracking performance in scenes of realistic complexity.

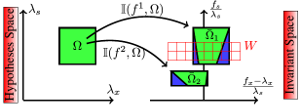

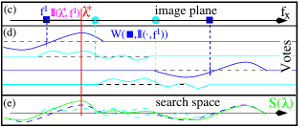

PRISM: PRincipled Implicit Shape Model

This paper addresses the problem of object detection by means of the Generalised Hough transform paradigm. The Implicit Shape Model (ISM) is a well-known approach based on this idea. It made this paradigm popular and has been adopted many times. Although the algorithm exhibits robust detection performance, its description, i.e. its probabilistic model, involves arguments which are unsatisfactory from a probabilistic standpoint. We propose a framework which overcomes these problems and gives a sound justification to the voting procedure. Furthermore, our framework allows for a formal understanding of the heuristic of soft-matching commonly used in visual vocabulary systems. We show that it is sufficient to use soft-matching during learning only and to perform fast nearest neighbour matching at recognition time (where speed is of prime importance). Our implementation is based on Gaussian Mixture Models (instead of kernel density estimators as with ISM) which lead to a fast gradient-based object detector.
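For readers unfamiliar with the Generalised Hough transform paradigm, the following sketch shows plain Hough voting with nearest-neighbour codebook matching at recognition time. It illustrates the general voting idea only, not the PRISM model or its Gaussian Mixture Model formulation; the codebook layout, the `bin_size` parameter, and the function name are assumptions.

```python
from collections import defaultdict


def hough_vote(features, codebook, bin_size=10):
    """Accumulate votes for the object centre and return the strongest cell.

    features: list of (x, y, descriptor), descriptor being a tuple of floats.
    codebook: list of (descriptor, [(dx, dy, weight), ...]) entries, where each
              offset points from a feature location to the object centre.
    Returns ((cell_x, cell_y), score), or None if no votes were cast.
    """
    votes = defaultdict(float)
    for x, y, desc in features:
        # Nearest-neighbour matching: use only the closest codebook entry.
        _, offsets = min(
            codebook,
            key=lambda entry: sum((a - b) ** 2 for a, b in zip(entry[0], desc)),
        )
        # Each stored offset casts a weighted vote into a coarse spatial bin.
        for dx, dy, weight in offsets:
            cell = (int((x + dx) // bin_size), int((y + dy) // bin_size))
            votes[cell] += weight
    return max(votes.items(), key=lambda kv: kv[1]) if votes else None
```

In this sketch any soft-matching would happen when the codebook weights are learned, so recognition needs just one nearest-neighbour lookup per feature.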

Shape-from-Recognition: Recognition Enables Meta-Data Transfer

Low-level cues in an image allow us to infer higher-level information such as the presence of an object, but the inverse is also true. Category-level object recognition has now reached a level of maturity and accuracy at which its output can be successfully fed back to other processes. This is what we refer to as cognitive feedback. In this paper, we study one particular form of cognitive feedback, where the ability to recognize objects of a given category is exploited to infer different kinds of meta-data annotations for images of previously unseen object instances, in particular information on 3D shape. Meta-data can be discrete, real-valued, or vector-valued. Our approach builds on the Implicit Shape Model of Leibe and Schiele [1], and extends it to transfer annotations from training images to test images. We focus on the inference of approximate 3D shape information about objects in a single 2D image. In experiments, we illustrate how our method can infer depth maps, surface normals, and part labels for previously unseen object instances.

Moving Obstacle Detection in Highly Dynamic Scenes

We address the problem of vision-based multi-person tracking in busy pedestrian zones using a stereo rig mounted on a mobile platform. Specifically, we are interested in the application of such a system for supporting path planning algorithms in the avoidance of dynamic obstacles. The complexity of the problem calls for an integrated solution, which extracts as much visual information as possible and combines it through cognitive feedback. We propose such an approach, which jointly estimates camera position, stereo depth, object detections, and trajectories based only on visual information. The interplay between these components is represented in a graphical model. For each frame, we first estimate the ground surface together with a set of object detections. Based on these results, we then address object interactions and estimate trajectories. Finally, we employ the tracking results to predict future motion for dynamic objects and fuse this information with a static occupancy map estimated from dense stereo. The approach is experimentally evaluated on several long and challenging video sequences from busy inner-city locations recorded with different mobile setups. The results show that the proposed integration makes stable tracking and motion prediction possible, and thereby enables path planning in complex and highly dynamic scenes.
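The final fusion step can be pictured with a small sketch that extrapolates each track and marks the predicted cells in a copy of the static occupancy map. The abstract does not specify the motion model or the map representation, so the constant-velocity assumption, the binary grid, and the `horizon` and `dt` parameters below are all illustrative.

```python
def fuse_predictions(static_grid, tracks, horizon=3, dt=1.0):
    """Return a copy of static_grid with predicted track positions marked.

    static_grid: list of rows, each a list of 0 (free) or 1 (occupied).
    tracks: list of (x, y, vx, vy) in grid-cell units (hypothetical format).
    horizon: number of future time steps to predict.
    """
    fused = [row[:] for row in static_grid]  # leave the static map untouched
    rows = len(fused)
    cols = len(fused[0]) if fused else 0
    for x, y, vx, vy in tracks:
        for step in range(horizon + 1):
            # Constant-velocity extrapolation of the tracked object.
            cx = int(round(x + vx * step * dt))
            cy = int(round(y + vy * step * dt))
            if 0 <= cy < rows and 0 <= cx < cols:
                fused[cy][cx] = 1
    return fused
```

A path planner could then treat the fused grid as the set of cells to avoid over the prediction horizon.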

In-hand Scanning with Online Loop Closure

We present a complete 3D in-hand scanning system that allows users to scan objects by simply turning them freely in front of a real-time 3D range scanner. The 3D object model is reconstructed online as a point cloud by registering and integrating the incoming 3D patches with the online 3D model. The accumulation of registration errors leads to the well-known loop closure problem. We address this issue during the scanning session itself by distorting the object as rigidly as possible. Scanning errors are removed by explicitly handling outliers. As a result of our proposed online modeling and error handling procedure, the online model is of sufficiently high quality to serve as the final model. Thus, no additional post-processing, which might introduce artifacts into the reconstructed model, is required. We demonstrate our approach on several difficult real-world objects and quantitatively evaluate the resulting modeling accuracy.

Markovian Tracking-by-Detection from a Single, Uncalibrated Camera

We present an algorithm for multi-person tracking-by-detection in a particle filtering framework. To address the unreliability of current state-of-the-art object detectors, our algorithm tightly couples object detection, classification, and tracking components. Instead of relying only on the final, sparse output from a detector, we additionally employ its continuous intermediate output to impart our approach with more flexibility to handle difficult situations. The resulting algorithm robustly tracks a variable number of dynamically moving persons in complex scenes with occlusions. The approach does not rely on background modeling and is based only on 2D information from a single camera, not requiring any camera or ground plane calibration. We evaluate the algorithm on the PETS’09 tracking dataset and discuss the importance of the different algorithm components to robustly handle difficult situations.

Improved Multi-Person Tracking with Active Occlusion Handling

We address the problem of vision-based multi-person tracking in busy inner-city locations using a stereo rig mounted on a mobile platform. Specifically, we are interested in the application of such a system for autonomous navigation and path planning. In such a scenario, semantic information about the moving scene objects becomes important. In order to estimate this robustly, we combine classical geometric world mapping with multi-person detection and tracking. In this paper, we refine an approach presented in earlier work, which jointly estimates camera position, stereo depth, object detections, and trajectories based only on visual information. We analyze the influence of the trajectory generator, which forms part of any tracking-by-detection system, and propose a set of measures to improve its performance. The extensions are experimentally evaluated on challenging, realistic video sequences recorded at busy inner-city locations. The results show that the proposed extensions significantly improve overall system performance, making the resulting detection and tracking capabilities an interesting component of future navigation systems for highly dynamic scenes.

Previous Year (2008)