Publications

3D Object Recognition from Range Images using Local Feature Histograms

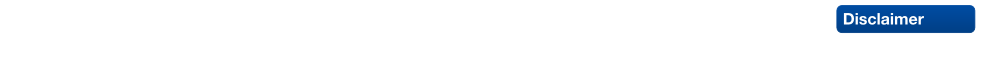

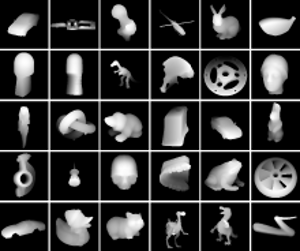

This paper explores a view-based approach to recognize free-form objects in range images. We are using a set of local features that are easy to calculate and robust to partial occlusions. By combining those features in a multidimensional histogram, we can obtain highly discriminant classifiers without the need for segmentation. Recognition is performed using either histogram matching or a probabilistic recognition algorithm. We compare the performance of both methods in the presence of occlusions and test the system on a database of almost 2000 full-sphere views of 30 free-form objects. The system achieves a recognition accuracy above 93% on ideal images, and of 89% with 20% occlusion.
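As a rough illustration of the histogram-matching idea summarized above, the following sketch quantizes per-pixel feature tuples into a sparse multidimensional histogram and picks the stored view whose histogram overlaps most with the query. It is a minimal sketch under stated assumptions, not the paper's implementation: the abstract names neither the features nor the matching measure, so the two-dimensional feature values, the histogram-intersection measure, and the names `build_histogram`, `intersection`, and `recognize` are all hypothetical choices made for illustration.

```python
from collections import Counter


def build_histogram(features, bins, lo=0.0, hi=1.0):
    """Quantize d-dimensional feature tuples into a normalized sparse histogram."""
    counts = Counter()
    width = (hi - lo) / bins
    for feat in features:
        # Clamp each component to [0, bins - 1] so that `hi` falls in the last bin.
        cell = tuple(min(bins - 1, max(0, int((x - lo) / width))) for x in feat)
        counts[cell] += 1
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()} if total else {}


def intersection(h1, h2):
    """Histogram intersection (assumed measure): 1.0 means identical, 0.0 disjoint."""
    return sum(min(v, h2.get(cell, 0.0)) for cell, v in h1.items())


def recognize(query, models):
    """Return the name of the stored histogram that best matches the query."""
    return max(models, key=lambda name: intersection(query, models[name]))


# Hypothetical feature tuples; a real system would compute them from range data.
models = {
    "duck": build_histogram([(0.1, 0.2), (0.15, 0.3), (0.8, 0.9)], bins=4),
    "cup": build_histogram([(0.6, 0.6), (0.7, 0.6), (0.65, 0.55)], bins=4),
}
# A query missing some of the duck's features, standing in for partial occlusion.
query = build_histogram([(0.1, 0.2), (0.8, 0.9)], bins=4)
best = recognize(query, models)
```

Because the histogram only counts local feature values, the query needs no segmentation step, and losing some features to occlusion lowers the match score rather than invalidating it.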

@inproceedings{hetzel20013d,

title={{3D Object Recognition from Range Images using Local Feature Histograms}},

author={{Hetzel, G{\"u}nter and Leibe, Bastian and Levi, Paul and Schiele, Bernt}},

booktitle={{Computer Vision and Pattern Recognition, 2001. CVPR 2001. Proceedings of the 2001 IEEE Computer Society Conference on}},

volume={2},

pages={II--394},

year={2001},

organization={IEEE}

}

Local Feature Histograms for Object Recognition from Range Images

In this paper, we explore the use of local feature histograms for view-based recognition of free-form objects from range images. Our approach uses a set of local features that are easy to calculate and robust to partial occlusions. By combining them in a multidimensional histogram, we can obtain highly discriminative classifiers without having to solve a segmentation problem. The system achieves above 91% recognition accuracy on a database of almost 2000 full-sphere views of 30 free-form objects, with only minimal space requirements. In addition, since it only requires the calculation of very simple features, it is extremely fast and can achieve real-time recognition performance.

@article{leibe2001local,

title={Local feature histograms for object recognition from range images},

author={Leibe, Bastian and Hetzel, G{\"u}nter and Levi, Paul},

year={2001}

}

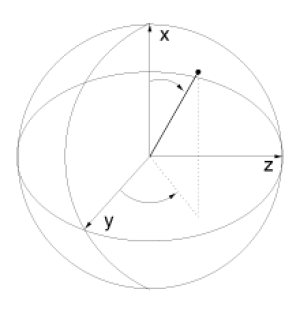

Integration of Wireless Gesture Tracking, Object Tracking, and 3D Reconstruction in the Perceptive Workbench

The Perceptive Workbench endeavors to create a spontaneous and unimpeded interface between the physical and virtual worlds. Its vision-based methods for interaction constitute an alternative to wired input devices and tethered tracking. Objects are recognized and tracked when placed on the display surface. By using multiple infrared light sources, the object’s 3D shape can be captured and inserted into the virtual interface. This ability permits spontaneity since either preloaded objects or those objects selected at run-time by the user can become physical icons. Integrated into the same vision-based interface is the ability to identify 3D hand position, pointing direction, and sweeping arm gestures. Such gestures can enhance selection, manipulation, and navigation tasks. In previous publications, the Perceptive Workbench has demonstrated its utility for a variety of applications, including augmented reality gaming and terrain navigation. This paper will focus on the implementation and performance aspects and will introduce recent enhancements to the system.

@incollection{leibe2001integration,

title={{Integration of Wireless Gesture Tracking, Object Tracking, and 3D Reconstruction in the Perceptive Workbench}},

author={{Leibe, Bastian and Minnen, David and Weeks, Justin and Starner, Thad}},

booktitle={{Computer Vision Systems}},

pages={73--92},

year={2001},

publisher={Springer}

}
