TAO-VOS

Overview

We annotated 126 validation sequences of the Tracking Any Object (TAO) dataset with segmentation masks for video object segmentation (VOS). Additionally, we annotated all 500 training sequences semi-automatically while ensuring high quality (see the paper below for details).

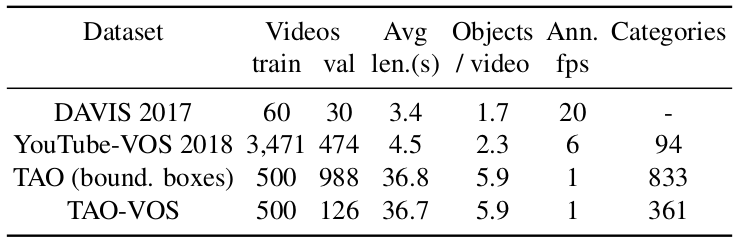

Compared to existing VOS datasets, TAO-VOS sequences are significantly longer, contain more objects per sequence, and cover a larger number of object classes:

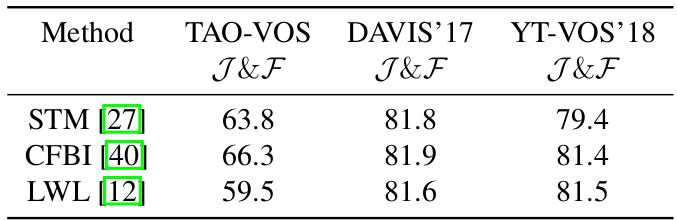

While performance on DAVIS and YouTube-VOS is saturating, TAO-VOS remains challenging.

Note that both TAO and TAO-VOS are annotated only at 1 FPS. The annotations and qualitative results are visualized in the following video:

Downloads

To further improve the quality of the training annotations, we added more manually annotated masks to the training set. These additional masks were not used in the paper but are included in the benchmark release.

- For the complete package containing images and masks, please visit the MOTChallenge website.
- Mask annotations without images can be downloaded here.
- If you need all intermediate frames (at a higher frame rate), you can obtain them by following the TAO GitHub download instructions and/or the TAO MOTChallenge download page.
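Because the masks exist only at 1 FPS, frames downloaded at the full frame rate have to be matched to the annotated ones. The following is a minimal sketch of one way to do this, under hypothetical assumptions: frame 0 of each sequence is annotated, and annotated frames are spaced one second apart at a known, constant frame rate. The actual TAO/TAO-VOS frame selection may differ, so rely on the frame names in the annotation files. The helpers `annotated_frame_indices` and `nearest_annotated` are illustrative only and are not part of any TAO tooling.

```python
import bisect


def annotated_frame_indices(num_frames: int, fps: float) -> list[int]:
    """Return frame indices sampled once per second of video.

    Hypothetical assumption: frame 0 is annotated and every further annotated
    frame lies one second later at a constant frame rate `fps`. The real
    TAO-VOS frame selection may differ.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    indices = []
    second = 0
    while True:
        idx = round(second * fps)  # nearest full-rate frame to this timestamp
        if idx >= num_frames:
            break
        indices.append(idx)
        second += 1
    return indices


def nearest_annotated(frame_idx: int, annotated: list[int]) -> int:
    """Return the annotated frame index closest to `frame_idx`.

    `annotated` must be sorted in ascending order; ties go to the earlier frame.
    """
    if not annotated:
        raise ValueError("no annotated frames")
    pos = bisect.bisect_left(annotated, frame_idx)
    # The closest annotated frame is either just before frame_idx or at/after it.
    candidates = annotated[max(pos - 1, 0):pos + 1]
    return min(candidates, key=lambda a: abs(a - frame_idx))


if __name__ == "__main__":
    keyframes = annotated_frame_indices(num_frames=95, fps=30)
    print(keyframes)                         # [0, 30, 60, 90]
    print(nearest_annotated(44, keyframes))  # 30
```

Under these assumptions, a 95-frame video at 30 FPS has annotated frames 0, 30, 60 and 90, and any intermediate frame can be paired with its nearest annotated frame.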

Paper

Reducing the Annotation Effort for Video Object Segmentation Datasets
Paul Voigtlaender, Lishu Luo, Chun Yuan, Yong Jiang, Bastian Leibe
Accepted at WACV 2021

Citation

If you use this benchmark, please cite:

@inproceedings{Voigtlaender21WACV,
  title={Reducing the Annotation Effort for Video Object Segmentation Datasets},
  author={Paul Voigtlaender and Lishu Luo and Chun Yuan and Yong Jiang and Bastian Leibe},
  booktitle={WACV},
  year={2021}
}

as well as the original TAO paper:

@inproceedings{Dave20ECCV,
  title={TAO: A Large-Scale Benchmark for Tracking Any Object},
  author={Achal Dave and Tarasha Khurana and Pavel Tokmakov and Cordelia Schmid and Deva Ramanan},
  booktitle={ECCV},
  year={2020}
}

Acknowledgements

We would like to thank the creators of the original datasets on which TAO-VOS is based: TAO, Charades, LaSOT, Argoverse, AVA, YFCC100M, BDD100K, and HACS.

Contact

If you have questions, please contact Paul Voigtlaender via voigtlaender@vision.rwth-aachen.de